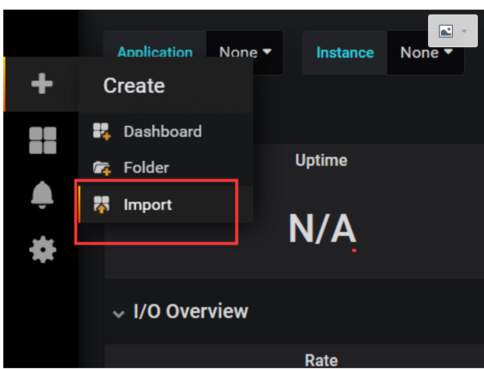

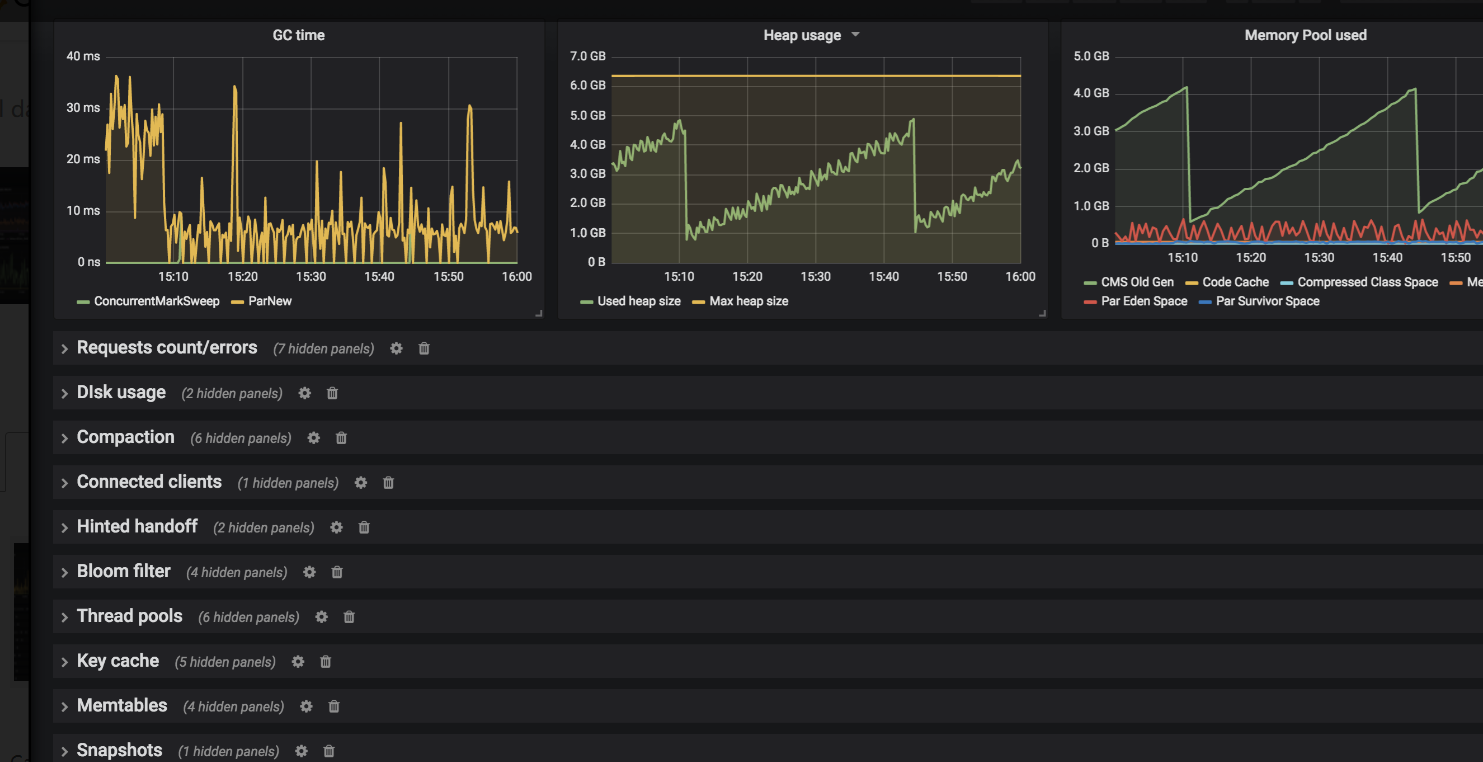

This reporter took a snapshot from MetricRegistry every minute, and pushed them, after batching, into a local kafka cluster. The architecture at this time was composed of Taboola services, who reported their metrics using a new MetrictankReporter component. It receives various inputs (carbon/kafka, etc.).īut mainly we chose Metrictank because it’s compatible with the hierarchical Graphite syntax – thus we didn’t have to refactor our current dashboards to work with the new key-value notation.It’s Multi-tenant – thus, we could share our content with it.It is C* backend – which is known to be very stable and we were experienced with it, from other data paths.We chose Metrictank, because of the following reasons: The new requirement was : 100 million metrics per minute (!), and we knew the current architecture would not be able to scale enough to support this.

Moreover, the RabbitMq cluster wasn’t well monitored, and it was complicated to maintain.Īt this point, we supported 20 million metrics per minute, and we wanted more. We started to suffer from metrics data loss, when the carbon-cache nodes crashed due to out of memory, or when the disk space (used by Whisper) ran out. In addition, we used different kinds of carbons for caching and aggregations. The cluster of carbons became big and complex. When we wanted to scale horizontally – we added additional Carbon, Graphite-web and Whisper DB nodes. This is how our primary architecture looked: Then, the Graphite web app read the metrics from Whisper, and made them available for Grafana to read and display. The carbons are part of Graphite’s backend, and are mainly responsible for writing the metrics quickly to the disk (in a hierarchical file system notation) using Whisper. We used a Graphite Reporter component to get a snapshot of metrics from MetricRegistry (a 3rd party collection of metrics belonging to dropwizard that we used) every minute, and sent them in batches to RabbitMq for the carbon-relays to consume. We started with a basic metrics configuration of Graphite servers. Our publishers and advertisers increase exponentially, thus our data increases, leading to a constant growth in metrics volume. In the past two years, we moved from supporting 20 million metrics/min with Graphite, to 80 million metrics/min using Metrictank, and finally to a framework that will enable us to grow to over 100 million metrics/min, with Prometheus and Thanos. In this blogpost I will describe how we, at Taboola, changed our metrics infrastructure twice as a result of continuous scaling in metrics volume.
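Both reporters described above follow the same pattern: snapshot the registry once per interval, split the snapshot into batches, and hand each batch to a transport. The sketch below illustrates that pattern in Python under stated assumptions; it is not Taboola's Graphite Reporter or MetrictankReporter. The registry is modelled as a plain dict from dotted metric names to values, the `send` callable is a hypothetical stand-in for the RabbitMQ or Kafka producer, and the batch size and metric names are made up. Each line uses Graphite's plaintext format `<path> <value> <timestamp>`; a real Metrictank pipeline would instead encode points in whatever format its Kafka input expects.

```python
import time
from typing import Callable, Dict, List, Sequence


def snapshot_to_lines(registry: Dict[str, float], timestamp: int) -> List[str]:
    """Render a snapshot as Graphite plaintext lines: '<dotted.path> <value> <ts>'."""
    return [f"{name} {value} {timestamp}" for name, value in sorted(registry.items())]


def batch(items: Sequence, batch_size: int) -> List[list]:
    """Split items into consecutive chunks of at most batch_size elements."""
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]


class PeriodicReporter:
    """Hypothetical reporter: snapshot, batch, and send once per interval."""

    def __init__(self, registry: Dict[str, float], send: Callable[[str], None],
                 batch_size: int = 500, interval_s: int = 60):
        self.registry = registry
        self.send = send              # stand-in for a RabbitMQ/Kafka producer
        self.batch_size = batch_size  # assumed value, not from the original setup
        self.interval_s = interval_s  # one report per minute, as in the post

    def report_once(self, now: float = None) -> int:
        """Send one snapshot; returns the number of batches sent."""
        ts = int(now if now is not None else time.time())
        # Copy first so updates during reporting don't leak into this snapshot.
        snapshot = dict(self.registry)
        chunks = batch(snapshot_to_lines(snapshot, ts), self.batch_size)
        for chunk in chunks:
            # One message per batch, newline-terminated lines.
            self.send("\n".join(chunk) + "\n")
        return len(chunks)

    def run_forever(self) -> None:
        """Report, then sleep until the next interval boundary to avoid drift."""
        while True:
            self.report_once()
            time.sleep(self.interval_s - (time.time() % self.interval_s))


if __name__ == "__main__":
    sent: List[str] = []
    reporter = PeriodicReporter(
        {"app1.requests.count": 120, "app1.errors.count": 3},  # hypothetical names
        sent.append,
        batch_size=1,
    )
    reporter.report_once(now=1700000000)
    print(sent)
    # → ['app1.errors.count 3 1700000000\n', 'app1.requests.count 120 1700000000\n']
```

Copying the registry before formatting keeps each report internally consistent, and sending one message per batch rather than one per metric keeps the number of broker messages low as metrics volume grows.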
